Editor’s Note: The Escape Artist is a WebXR game available now from Paradowski Creative that features robust support for Quest 3 controller tracking with button input for interaction and teleportation. While working to support eye and hand tracking on Vision Pro from the same URL, technical director James C. Kane explores issues around design, tech and privacy.

There are pros and cons to Vision Pro’s total reliance on gesture and eye tracking, but when it works in context, gaze-based input feels like the future we’ve been promised, as if a computer is reading your mind in real time to give you what you want. This first iteration is imperfect given the many valid privacy concerns in play, but Apple has clearly set a new standard for immersive user experience going forward.

They’ve even brought this innovation to the web in the form of Safari’s transient-pointer input. The modern browser is a full-featured spatial computing platform in its own right, and Apple raised eyebrows when it announced beta support for the cross-platform WebXR specification on Vision Pro at launch. While some limitations remain, instant global distribution of immersive experiences without App Store curation or fees makes this an incredibly powerful, interesting and accessible platform.

We’ve been exploring this channel for years at Paradowski Creative. We’re an agency team building immersive experiences for top global brands like Sesame Street, adidas and Verizon, but we also invest in award-winning original content to push the boundaries of the medium. The Escape Artist is our VR escape room game where you play as the muse of an artist trapped in their own work, deciphering hints and solving puzzles to find inspiration and escape to the real world. It’s featured on the homepage of every Meta Quest headset in the world, has been played by more than a quarter-million people in 168 countries, and was People’s Voice Winner for Best Narrative Experience at this year’s Webby Awards.

Our game was primarily designed around Meta Quest’s physical controllers and we were halfway through production by the time Vision Pro was announced. Still, WebXR’s true potential lies in its cross-platform, cross-input utility, and we realized quickly that our concept could work well in either input mode. We were able to debut beta support for hand tracking on Apple Vision Pro within weeks of its launch, but now that we have better documentation on Apple’s new eye tracking-based input, it’s time to revisit this work. How is gaze exposed? What might it be good for? And where might it still fall short? Let’s see.

Prior Art and Documentation

Ada Rose Cannon and Brandel Zachernuk of Apple recently published a blog post explaining how this input works, including its event lifecycle and key privacy-preserving implementation details. The post includes a three.js example which deserves examination, as well.

Uniquely, this input provides a gaze vector on selectstart: that is, transform data that can be used to draw a line from between the user’s eyes toward the object they’re looking at the moment the pinch gesture is recognized. With that, applications can determine exactly what the user is focused on in context. In this example, that’s a series of primitive shapes the user can select with gaze and then manipulate further by moving their hand.
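As a rough illustration of how that gaze vector might be read, the sketch below turns the pose reported on selectstart into a ray. It assumes a `referenceSpace` obtained elsewhere from the session. `rayFromTransform` and `onSelectStart` are hypothetical helper names, not taken from Apple's example; the only WebXR members used are `XRFrame.getPose()` and the input source's `targetRayMode` and `targetRaySpace`.

```javascript
// Build a ray from an XRRigidTransform-like object.
// WebXR target rays point down the transform's local -Z axis.
function rayFromTransform(transform) {
  const { x, y, z, w } = transform.orientation; // unit quaternion
  return {
    origin: {
      x: transform.position.x,
      y: transform.position.y,
      z: transform.position.z,
    },
    // Local (0, 0, -1) rotated by the quaternion.
    direction: {
      x: -2 * (x * z + w * y),
      y: -2 * (y * z - w * x),
      z: -(1 - 2 * (x * x + y * y)),
    },
  };
}

// Hypothetical handler: returns the gaze ray for a transient-pointer
// pinch, or null for any other input or when no pose is available.
function onSelectStart(event, referenceSpace) {
  const source = event.inputSource;
  if (source.targetRayMode !== "transient-pointer") return null;
  const pose = event.frame.getPose(source.targetRaySpace, referenceSpace);
  return pose ? rayFromTransform(pose.transform) : null;
}

// In a live session (variable names assumed):
// session.addEventListener("selectstart", (e) => {
//   const ray = onSelectStart(e, referenceSpace);
// });
```

The resulting ray is expressed in reference space coordinates, so an engine with its own world transform would still need to convert it before raycasting.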

Apple rightly sees this as highly personal data that should be protected at a system level and treated with extreme care by developers. To address these concerns, eyeline data is critically only exposed for a single frame. This should prevent abuse by malicious developers, but as a practical matter, it also precludes any gaze-based highlight effects prior to object selection. Likewise, native developers aren’t given direct access to frame-by-frame eye vectors either, but they can at least make use of OS-level support from UIKit components. We believe Apple will eventually move in this direction for the web, as well, but for now this remains a limitation.

Despite that, using transient-pointer input for object selection can make sense when the selectable objects occupy enough of your field of view to avoid frustrating near-misses. But as a test case, I’d like to try developing another primary mechanic, and I’ve noticed a consistent issue with Vision Pro content that we might help address.

Locomotion: A Problem Statement

Few if any experiences on Vision Pro allow for full freedom of motion and self-directed movement through virtual space. Apple’s marketing emphasizes seated, stationary experiences, many of which take place inside flat, 2D windows floating in front of the user. Even in fully-immersive apps, at best you get linear hotspot-based teleportation, without a true sense of agency or exploration.

A major reason for this is the lack of consistent and compelling teleport mechanics for content that relies on hand tracking. When using physical controllers, “laser pointer” teleportation has become widely accepted and is fairly accurate. But since Apple has eschewed any such handheld peripherals for now, developers and designers must recalibrate. A 1:1 reproduction of a controller-like 3D cursor extending from the wrist would be possible, but there are practical and technical challenges with this design. Consider these poses:

From the perspective of a Vision Pro, the image on the left shows a pinch gesture that’s easily identifiable: raycasting from this wrist orientation to objects within a few meters would work well. But on the right, as the user straightens their elbow just a few degrees to point toward the ground, the gesture becomes practically unrecognizable to camera sensors, and the user’s seat further acts as a physical barrier to pointing straight down. And “pinch” is a simple case; these problems are magnified for more complex gestures involving many joint positions (for example ✌️, 🤘, or 👍).

All told, this means that hand tracking, gesture recognition and wrist vector on their own do not comprise a very robust solution for teleportation. But I hypothesize we can utilize Apple’s new transient-pointer input, based on both gaze and subtle hand movement, to design a teachable, intuitive teleport mechanic for our game.

A Minimal Reproduction

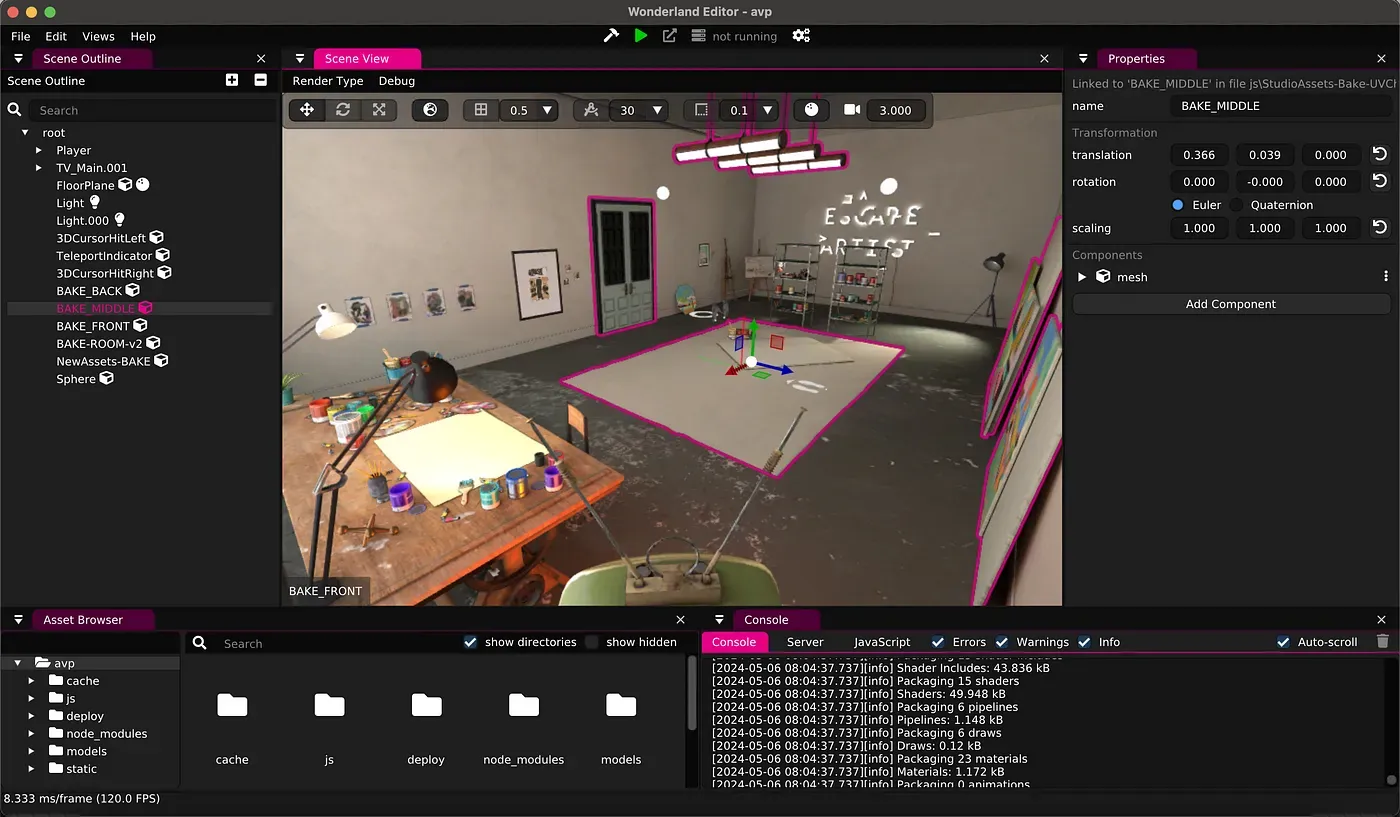

While Apple’s lone transient-pointer example is written in three.js, our game is made in Wonderland Engine, a more Unity-like editor and entity-component system designed for the web, whose scripting relies on the glMatrix math library. So some conversion of input logic will be needed.

As mentioned, our game already supports hand tracking, but notably, transient-pointer inputs are technically separate from hand tracking and don’t require any special browser permissions. On Vision Pro, full “hand tracking” is only used to report joint location data for rendering and has no built-in functionality unless added by developers (i.e. colliders added to the joints, pose recognition, etc.). If hand tracking is enabled, a transient-pointer will file in line behind these more persistent inputs.
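Since the transient-pointer files in behind any hand inputs, its index in the session's input list cannot be assumed. One way to cope, sketched here with a hypothetical helper name, is to search the list by `targetRayMode` instead of position:

```javascript
// Return every transient-pointer input currently in the list,
// regardless of where it sits relative to hand or controller inputs.
// Accepts session.inputSources or any iterable of input-source-like objects.
function findTransientPointers(inputSources) {
  return Array.from(inputSources).filter(
    (source) => source.targetRayMode === "transient-pointer"
  );
}

// Mock data for illustration only (not captured from a device):
// two persistent hand inputs followed by one pinch in progress.
const mockSources = [
  { handedness: "left", hand: {}, targetRayMode: "tracked-pointer" },
  { handedness: "right", hand: {}, targetRayMode: "tracked-pointer" },
  { handedness: "right", hand: null, targetRayMode: "transient-pointer" },
];
const pinches = findTransientPointers(mockSources);
```

The same filter works unchanged when hand tracking is off and the transient-pointer is the only entry.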

So, with that in mind, my minimal reproduction should:

- Listen for `selectstart` events and check for inputs with a `targetRayMode` set to `transient-pointer`

- Pass these inputs to the XR frame’s `getPose()` to return an `XRPose` describing the input’s orientation

- Convert `XRRigidTransform` values from reference space to world space (shoutout to perennial Paradowski all-star Ethan Michalicek for realizing this, thereby preserving my sanity for another day)

- Raycast from the world space transform to a navmesh collision layer

- Spawn teleport reticle, translating its X and Z position to the input’s `gripSpace` until the user releases pinch

- Teleport the user to location of the reticle on `selectend`
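The conversion, raycast and reticle steps above can be sketched in plain JavaScript. This is a minimal sketch under stated assumptions, not the code from the gist: hand-rolled math stands in for glMatrix, a flat floor stands in for the navmesh collision layer, the player rig is assumed to have a world position and rotation with no scale, and grip positions are assumed to arrive already in world space. All function names are hypothetical.

```javascript
// Rotate vector v by unit quaternion q (plain {x, y, z[, w]} objects).
function rotate(q, v) {
  const tx = 2 * (q.y * v.z - q.z * v.y);
  const ty = 2 * (q.z * v.x - q.x * v.z);
  const tz = 2 * (q.x * v.y - q.y * v.x);
  return {
    x: v.x + q.w * tx + (q.y * tz - q.z * ty),
    y: v.y + q.w * ty + (q.z * tx - q.x * tz),
    z: v.z + q.w * tz + (q.x * ty - q.y * tx),
  };
}

// Reference space -> world space, assuming an unscaled player rig.
function toWorld(rig, ray) {
  const o = rotate(rig.rotation, ray.origin);
  return {
    origin: {
      x: o.x + rig.position.x,
      y: o.y + rig.position.y,
      z: o.z + rig.position.z,
    },
    direction: rotate(rig.rotation, ray.direction),
  };
}

// Stand-in for the navmesh raycast: intersect a flat floor at floorY.
function hitFloor(ray, floorY = 0) {
  if (ray.direction.y >= -1e-6) return null; // not pointing downward
  const t = (floorY - ray.origin.y) / ray.direction.y;
  if (t <= 0) return null; // floor is behind the ray origin
  return {
    x: ray.origin.x + ray.direction.x * t,
    y: floorY,
    z: ray.origin.z + ray.direction.z * t,
  };
}

// selectstart: place the reticle where the gaze ray meets the floor.
function beginTeleport(rig, localRay, gripStart) {
  const hit = hitFloor(toWorld(rig, localRay));
  return hit ? { hit, gripStart, reticle: { ...hit } } : null;
}

// While pinching: offset the reticle in X and Z by the hand's movement.
function moveReticle(state, grip) {
  state.reticle.x = state.hit.x + (grip.x - state.gripStart.x);
  state.reticle.z = state.hit.z + (grip.z - state.gripStart.z);
  return state.reticle;
}

// selectend: move the rig to the reticle (ignores head offset in the rig).
function endTeleport(rig, state) {
  rig.position = { ...state.reticle };
  return rig.position;
}
```

Keeping the gaze hit and the hand offset separate means the eyeline only has to be sampled once, at pinch start, with every later adjustment coming from hand movement alone.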

The gist at the end of this post contains much of the relevant code, but in a greenfield demo project, this is relatively straightforward to implement:

In this default scene, I’m moving a sphere around the floor plane with “gaze-and-pinch,” not actually teleporting. Despite the lack of highlight effect or any preview visual, the eyeline raycasts are quite accurate. In addition to general navigation and UI, you can imagine other uses like a game where you play as Cyclops from X-Men, or eye-based puzzle mechanics, or searching for clues in a detective game.

To test further how this will look and feel in context, I want to see this mechanic in the final studio scene from The Escape Artist.

Even in this MVP state, this feels great and addresses several problems with “laser pointer” teleport mechanics. Because we’re not relying on controller or wrist orientation at all, the player’s elbow and arm can maintain a pose that’s both comfortable and easy for camera sensors to reliably recognize. The player barely needs to move their body, but still retains full freedom of motion throughout the virtual space, including subtle adjustments directly beneath their feet.

It’s also extremely easy to teach. Other gesture-based inputs often require heavy custom tutorialization per app or experience, but relying on the device’s default input mode reduces the learning curve. We’ll add tutorial steps nonetheless, but it’s likely anyone who has successfully navigated to our game on Vision Pro is necessarily already familiar with gaze-and-pinch mechanics.

Even for the uninitiated, this quickly starts to feel like mind control. It’s clear Apple has uncovered a key new aspect of spatial user experience.

Next Steps and Takeaways

Developing a minimal viable reproduction of this feature is perhaps less than half the battle. We now need to build this into our game logic, handle object selection alongside it, and test every change across four-plus headsets and multiple input modes. In addition, some follow-up tests for UX might be:

- Applying a noise filter to the hand motion on the teleport reticle; it’s still a bit jittery.

- Using normalized rotation delta of the wrist Z-axis (i.e. turning a key) to drive teleport rotation.

- Determining if a more sensible cancel gesture is needed. Pointing away from the navmesh could work, but is there a more intuitive way?
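For the first of these follow-ups, one simple candidate is an exponential moving average, a basic low-pass filter. The sketch below is only an illustration of that technique, not necessarily the filter the game will ship with; `makeSmoother` and its `alpha` parameter are hypothetical names.

```javascript
// Exponential moving average over reticle positions.
// alpha near 0 = heavy smoothing (more lag); alpha of 1 = no smoothing.
function makeSmoother(alpha = 0.2) {
  let prev = null;
  return function smooth(point) {
    if (prev === null) {
      // First sample: nothing to blend with yet.
      prev = { x: point.x, z: point.z };
    } else {
      prev = {
        x: prev.x + alpha * (point.x - prev.x),
        z: prev.z + alpha * (point.z - prev.z),
      };
    }
    return { x: prev.x, z: prev.z };
  };
}

// Usage: create one smoother per pinch and feed it the raw reticle
// position each frame before moving the visible reticle.
const smoothReticle = makeSmoother(0.5);
const first = smoothReticle({ x: 0, z: 0 });
const second = smoothReticle({ x: 2, z: 4 });
const third = smoothReticle({ x: 2, z: 4 });
```

The trade-off is latency: a lower `alpha` removes more jitter but makes the reticle trail behind the hand, so the value would need tuning on device.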

We’ll address all these things and release these features in part two of this series, tentatively scheduled for early June. But I’ve seen enough: I’m already convinced eye tracking will be a boon for our game and for spatial computing in general. It’s likely that forthcoming Meta products will adopt similar inputs built into their operating system. And indeed, transient-pointer support is already behind a feature flag on the Quest 3 browser, albeit an implementation without actual eye tracking.

In my view, both companies need to be more liberal in letting users grant permissions to give full camera access and gaze data to trusted developers. But even now, in the right context, this input is already incredibly useful, natural, and even magical. Developers would be wise to begin experimenting with it immediately.

Code:

transient-pointer-manager.js
